Introduction:

Machine learning algorithms thrive on data, and the quality of the input significantly impacts their performance. One crucial aspect of data preprocessing is handling categorical data: non-numerical information such as labels or names. In this article, we look at why one-hot encoding matters in machine learning, what categorical data is, the challenges it poses, and how encoding techniques address them.

Why One-Hot Encode Data in Machine Learning?

One-hot encoding is a widely used technique for representing categorical data in machine learning. Categorical data divides information into distinct categories or groups, which poses a challenge because many machine learning algorithms accept only numerical input. Techniques like one-hot encoding convert categorical variables (colors, product types, country names) into a numerical format that algorithms like neural networks, support vector machines, and many decision tree implementations can process.

The Significance of One-Hot Encoding in Machine Learning

One-hot encoding converts a categorical column into a binary matrix with one column per category, so each category is represented by a unique binary code. In each row exactly one bit is ‘hot’ (set to 1), marking that row’s category, while all the others remain ‘cold’ (set to 0). This lets a model treat each category as a separate feature instead of reading an artificial numeric order into arbitrary codes. As a result, one-hot encoding often improves how well models learn from nominal categorical features, and the encoded columns are easy to interpret because each one corresponds to a single category.
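To make the binary matrix concrete, here is a minimal from-scratch sketch in NumPy. The helper name `one_hot` is hypothetical, and ordering the columns alphabetically (as `np.unique` does) is an assumption of this sketch, not a requirement of the technique.

```python
import numpy as np

def one_hot(values):
    """Hypothetical helper: return (categories, matrix) where
    matrix[i, j] == 1 exactly when values[i] == categories[j]."""
    # Sorted unique categories define the column order;
    # `indices` maps each value to its column position.
    categories, indices = np.unique(values, return_inverse=True)
    # Start with an all-"cold" matrix: one row per sample, one column per category
    matrix = np.zeros((len(values), len(categories)), dtype=int)
    # Set exactly one "hot" bit in each row
    matrix[np.arange(len(values)), indices] = 1
    return categories, matrix

colors = ["red", "blue", "green", "green", "red"]
categories, encoded = one_hot(colors)
print(categories)  # ['blue' 'green' 'red']
print(encoded)
```

Every row of `encoded` sums to 1, which is exactly the "one hot bit per category" property described above.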

One-Hot Encoding: Categorical Data Overview

Categorical data is a distinct type of data characterized by qualitative attributes that group information into categories or classes. It differs from numerical data, which expresses quantities on a numerical scale. Examples of categorical data range from gender (male or female) to colors (red, blue, green) and types of animals (dog, cat, bird). Categorical data falls into two classes: nominal and ordinal. Nominal data has no inherent order or ranking, while ordinal data has a meaningful order among its categories (for example, small < medium < large). Analyzing categorical data typically involves frequency counts, percentages, and visual aids such as bar charts and pie charts, which reveal distribution patterns and relationships.
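The nominal/ordinal distinction and the frequency summaries mentioned above can be sketched with pandas' categorical dtype. The animal and size values are illustrative assumptions, not data from the article.

```python
import pandas as pd

# Nominal: no inherent order among the categories
animals = pd.Series(["dog", "cat", "bird", "dog", "cat", "dog"], dtype="category")

# Ordinal: an explicit, meaningful order (illustrative size labels)
sizes = pd.Series(
    pd.Categorical(
        ["small", "large", "medium", "small"],
        categories=["small", "medium", "large"],
        ordered=True,
    )
)

# Frequency counts and percentages, the usual first summaries of categorical data
counts = animals.value_counts()
percentages = animals.value_counts(normalize=True) * 100
print(counts)
print(percentages.round(1))

# Only ordered categoricals support comparisons such as "at least medium"
print(sizes >= "medium")
print(animals.cat.ordered, sizes.cat.ordered)  # False True
```

Declaring the order explicitly matters later: it is what an ordinal encoding should preserve, and what a nominal encoding must not invent.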

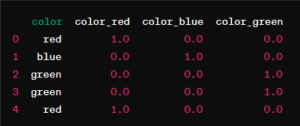

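For example, pandas can one-hot encode a column directly with its `get_dummies` function:

```python
import pandas as pd

# Example dataset with a categorical variable
data = {'color': ['red', 'blue', 'green', 'green', 'red']}
df = pd.DataFrame(data)

# Perform one-hot encoding using pandas get_dummies;
# dtype=int yields 0/1 columns (pandas 2.x otherwise returns True/False)
encoded_df = pd.get_dummies(df, columns=['color'], dtype=int)

print("Original DataFrame:")
print(df)

print("\nOne-Hot Encoded DataFrame:")
print(encoded_df)
```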

One-Hot Encoding: Significance in Data Science and Machine Learning

Handling categorical data properly matters in statistics, data science, and machine learning alike. Tasks that demand accurate classification and pattern recognition depend in particular on encoding methods such as one-hot encoding, which transforms categorical variables into a format machine learning algorithms can use. As a standard data-preprocessing step, one-hot encoding turns categorical data into features from which models can draw meaningful insights and support informed decisions.

Challenges of Categorical Data in Analysis and Machine Learning

Categorical data presents unique challenges in statistical analysis and machine learning because of its qualitative nature. Unlike numerical data, which can be measured and subjected to mathematical operations, categorical variables represent distinct groups with no inherent numerical meaning, so computations such as means or variances cannot be applied to them directly. Ordinality adds another complication: the order of categories may be undefined, or may not correspond to a meaningful numerical scale. Finally, variables with many categories require high-dimensional representations (one-hot encoding, for instance, creates one column per category), which introduces the “curse of dimensionality” and complicates model training and interpretation.
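The dimensionality problem is easy to see by one-hot encoding a high-cardinality column. The sketch below assumes a synthetic `customer_id` column with 500 distinct values; the name and sizes are hypothetical.

```python
import pandas as pd

# Hypothetical high-cardinality column: 1,000 rows drawn from 500 distinct IDs
df = pd.DataFrame({"customer_id": [f"id_{i % 500}" for i in range(1000)]})

# One-hot encoding creates one 0/1 column per distinct value
encoded = pd.get_dummies(df, columns=["customer_id"], dtype=int)

print(df.shape)       # (1000, 1)
print(encoded.shape)  # (1000, 500)

# Each row has a single 1 among 500 columns, so the matrix is almost entirely zeros
density = encoded.to_numpy().mean()
print(f"fraction of non-zero entries: {density:.3f}")  # 0.002
```

A single input column became 500 sparse features, which is the kind of blow-up that makes training slower and models harder to interpret.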

Mitigating Challenges through One-Hot Encoding and Other Techniques

To address the challenges posed by categorical data, practitioners use encoding techniques such as one-hot encoding and label encoding, which convert categorical data into a numerical format suitable for analysis and modeling. Because categorical data is prevalent in real-world scenarios, handling it effectively is crucial for extracting accurate and meaningful insights in data-driven applications. One-hot encoding, among other methods, plays a central role in producing numerical representations that support robust statistical analysis and machine learning model development.

One-Hot Encoding: Importance in Data Conversion

Converting categorical data into numerical format is a vital preprocessing step in machine learning and data analysis, because many algorithms accept only numerical input. One common approach is Label Encoding, which assigns each category an integer; it preserves ordinal relationships only when the integers follow the categories’ real order. Alternatively, One-Hot Encoding creates a binary column for each category, indicating its presence or absence. The choice depends on the nature of the data: for nominal data, One-Hot Encoding is preferred because it avoids introducing a misleading order, while Label Encoding suits ordinal data. Careful consideration is necessary to ensure the resulting numerical representation is accurate.
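Label encoding only preserves an ordinal relationship if the integer codes follow the categories’ real order. The sketch below contrasts scikit-learn’s `LabelEncoder`, which sorts classes alphabetically, with `OrdinalEncoder`, whose `categories` parameter lets you state the order explicitly. The size labels are illustrative assumptions.

```python
from sklearn.preprocessing import LabelEncoder, OrdinalEncoder

sizes = ["small", "large", "medium", "small"]

# LabelEncoder sorts classes alphabetically: large=0, medium=1, small=2,
# so the codes do NOT reflect small < medium < large
label_codes = LabelEncoder().fit_transform(sizes)
print(label_codes)  # [2 0 1 2]

# OrdinalEncoder accepts the meaningful order explicitly
ordinal = OrdinalEncoder(categories=[["small", "medium", "large"]])
# OrdinalEncoder expects 2-D input: one inner list per sample
ordinal_codes = ordinal.fit_transform([[s] for s in sizes]).ravel()
print(ordinal_codes)  # [0. 2. 1. 0.]
```

For ordinal features, supplying the order yourself is the safer choice; scikit-learn documents `LabelEncoder` as intended for target labels rather than input features.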

Choosing Between Label Encoding and One-Hot Encoding

Transforming categorical data often comes down to a choice between Label Encoding and One-Hot Encoding. Label Encoding assigns an integer to each category, which captures ordinal relationships when the integers follow the categories’ natural order. One-Hot Encoding instead uses binary columns that represent the presence or absence of each category. Label Encoding is therefore apt for ordinal data, while One-Hot Encoding is favored for nominal data because it does not impose a false sense of order. The decision hinges on the nature of the categorical variable and the requirements of the machine learning task, so weigh the implications of each technique to make sure the encoding accurately captures the information in the original data.

The following example performs label encoding and one-hot encoding with the popular scikit-learn library.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder, OneHotEncoder

# Sample dataset
data = {'Category': ['Red', 'Blue', 'Green', 'Green', 'Red', 'Blue']}
df = pd.DataFrame(data)

print("Original DataFrame:")
print(df)

# Label Encoding: classes are sorted alphabetically (Blue=0, Green=1, Red=2)
label_encoder = LabelEncoder()
df['Category_LabelEncoded'] = label_encoder.fit_transform(df['Category'])

# One-Hot Encoding: one binary column per category.
# sparse_output=False returns a dense array (scikit-learn >= 1.2;
# older releases called this parameter `sparse`).
one_hot_encoder = OneHotEncoder(sparse_output=False)
one_hot_encoded = one_hot_encoder.fit_transform(df[['Category']])
one_hot_encoded_df = pd.DataFrame(
    one_hot_encoded,
    columns=one_hot_encoder.get_feature_names_out(['Category']),
)

print("\nLabel Encoded DataFrame:")
print(df[['Category_LabelEncoded']])

print("\nOne-Hot Encoded DataFrame:")
print(one_hot_encoded_df)
```

1. Integer Encoding:

Integer encoding assigns a unique integer to each category. While this provides a numerical representation, it introduces an artificial ordinal relationship between the categories, potentially misleading the model. For instance, if we encode colors as 1 for red, 2 for blue, and 3 for green, the model might incorrectly assume that green is “greater” than red.

2. One-Hot Encoding:

One-Hot Encoding, on the other hand, offers a more nuanced solution. It creates a binary column for each category and marks the presence or absence of that category with a 1 or 0, respectively. This preserves the categorical nature of the data without introducing false ordinal relationships, since each category becomes an independent feature. The trade-off is one additional column per category, which becomes costly for variables with many categories.
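One way to see the difference is to compare distances between encoded categories, which matters for models that rely on magnitudes or distances. This sketch reuses the red=1, blue=2, green=3 integer mapping from the integer-encoding example; the `distance` helper is hypothetical.

```python
import numpy as np

colors = ["red", "blue", "green"]

# Integer encoding from the example above: red=1, blue=2, green=3
integer = {"red": 1, "blue": 2, "green": 3}
# One-hot encoding: each color gets its own row of the identity matrix
one_hot = {c: np.eye(len(colors))[i] for i, c in enumerate(colors)}

def distance(encoding, a, b):
    """Hypothetical helper: Euclidean distance between two encoded categories."""
    return float(np.linalg.norm(np.atleast_1d(encoding[a]) - np.atleast_1d(encoding[b])))

for a, b in [("red", "blue"), ("red", "green"), ("blue", "green")]:
    print(f"{a}-{b}: integer={distance(integer, a, b):.3f}, "
          f"one-hot={distance(one_hot, a, b):.3f}")
```

With integer codes, red looks twice as far from green as from blue; with one-hot vectors every pair of distinct colors is the same distance apart (√2), so no order is implied.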

Further Reading:

Delving deeper into the intricacies of data preprocessing and encoding techniques can significantly enhance your understanding of their impact on machine learning models. Explore resources on feature scaling, normalization, and other advanced preprocessing methods to gain a comprehensive view of the data preparation landscape.

Summary:

In machine learning, handling categorical data is a pivotal step toward building robust and accurate models. One-hot encoding stands out as a preferred method for nominal data, allowing algorithms to make use of non-numeric information. By understanding the nuances of categorical data and mastering encoding techniques, you enable your models to extract valuable insights from diverse datasets.

In conclusion, converting categorical data into numerical form lays the foundation for successful machine learning work. Applied where it fits the data, one-hot encoding helps your models learn accurately from diverse categorical features.